Analogy Time

Consider something like automobile production in the United States. It stands at about 12,000,000 cars per year. If 99.9% came off the assembly line in perfect condition and ready to drive, that would look pretty good on paper.

Except, perhaps, for the large parking lot outside the assembly plant that would be required to store the bad cars. With 1/10th of 1% of production failing, 12,000 bright, shiny, brand-new cars would pass through that lot every year. Better plan on having about 250 parking places ready at all times. At least the mechanics and technicians would have permanent jobs.

Microchips

For the average person looking at a tiny microchip, there’s very little to see. It’s a mysterious black box with “magic” inside. Show them a whole circuit board and suddenly they imagine an aerial view of a cityscape: a burgeoning metropolis with millions of residents and insane complexity. If you were to take a look inside a microchip, you would also see a city, with roads of aluminum and copper busily running atop the surface of the silicon die, layer upon layer of them. They make the worst freeway interchange in LA look like a country road.

Integrated circuits (ICs) are extremely complex and would not exist as they do today if not for the continued improvements in computers and software. Most ICs these days are packaged (molded) in an epoxy (the “black box” we see) that protects the IC while providing electrical paths from the IC to the outside world. Electrical testing of the ICs ensures they are functional before they’re shipped, but like all things in nature, they will degrade with time.

Although many of the causes of failure (the failure mechanisms) are associated with the IC itself, these typically do not manifest themselves until much later (10-20 years or longer) in the IC’s lifetime. The packaging of the part, though, introduces failure mechanisms of its own, many of which can decrease the lifetime of the product to 1-3 years. These include voids in the epoxy (which lead to uneven stresses in the package) and delamination of the plastic from the IC die or the leadframe (which also causes uneven stresses within the package and can be an accumulation area for moisture or other contaminants).

Uneven stresses, or stress gradients, are fairly neutral at a constant ambient temperature, but when the device temperature increases (from self-heating and/or external temperature increases), the plastic encapsulant (the package) expands more than the IC die in the package. A uniform encapsulant will put uniform stress on the die, but if there is an area of delamination or a void over the IC die or a wire bond, it can lead to a cracked die or broken wire bond.
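To put rough numbers on that expansion mismatch, the strain between two bonded materials can be estimated as the difference in their thermal expansion coefficients multiplied by the temperature rise. The sketch below is only an illustration: the coefficient for silicon (about 2.6 ppm/°C) is a commonly quoted room-temperature figure, while the value for the molding compound (12 ppm/°C) is an assumption chosen for the example, since real compounds vary widely. The function names are hypothetical.

```python
# Rough thermal-mismatch estimate between the encapsulant and the die.
# Material values are illustrative assumptions, not data for a real package.
SILICON_CTE_PPM_PER_C = 2.6   # commonly quoted figure for silicon
MOLD_CTE_PPM_PER_C = 12.0     # assumed value; real compounds vary widely


def mismatch_strain_ppm(delta_t_c,
                        cte_package=MOLD_CTE_PPM_PER_C,
                        cte_die=SILICON_CTE_PPM_PER_C):
    """Strain difference (in ppm) that builds up over a temperature rise."""
    return (cte_package - cte_die) * delta_t_c


def length_mismatch_um(delta_t_c, length_mm):
    """Unconstrained expansion difference (in microns) over a given length."""
    # ppm * mm = 1e-6 * 1e3 microns = 1e-3 microns
    return mismatch_strain_ppm(delta_t_c) * length_mm * 1e-3


if __name__ == "__main__":
    rise = 100.0  # degrees C, e.g. a cold start to a hot operating point
    print(f"strain mismatch: {mismatch_strain_ppm(rise):.0f} ppm")
    print(f"over a 5 mm die: {length_mismatch_um(rise, 5.0):.2f} um")
```

The absolute numbers are small, but when a void or delamination concentrates that mismatch over a wire bond or a die corner, the local stress is what does the damage.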

If the delamination is not over the die, it may be acceptable if it doesn’t extend to the outside of the package (which would let contaminants onto the die). When these types of delaminations exist, accelerated stress, such as temperature cycling, should be used to ensure delaminations do not “grow” and reach the exterior of the package.

In some surface-mount packages, delamination under the leadframe has been known to accumulate moisture. During the soldering process to the board, this moisture turns to steam, stressing the package until it fractures. This is known as “popcorn cracking”. But which packages have a problem and which are good?

SAM to the Rescue

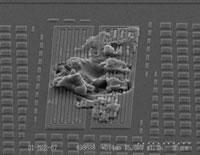

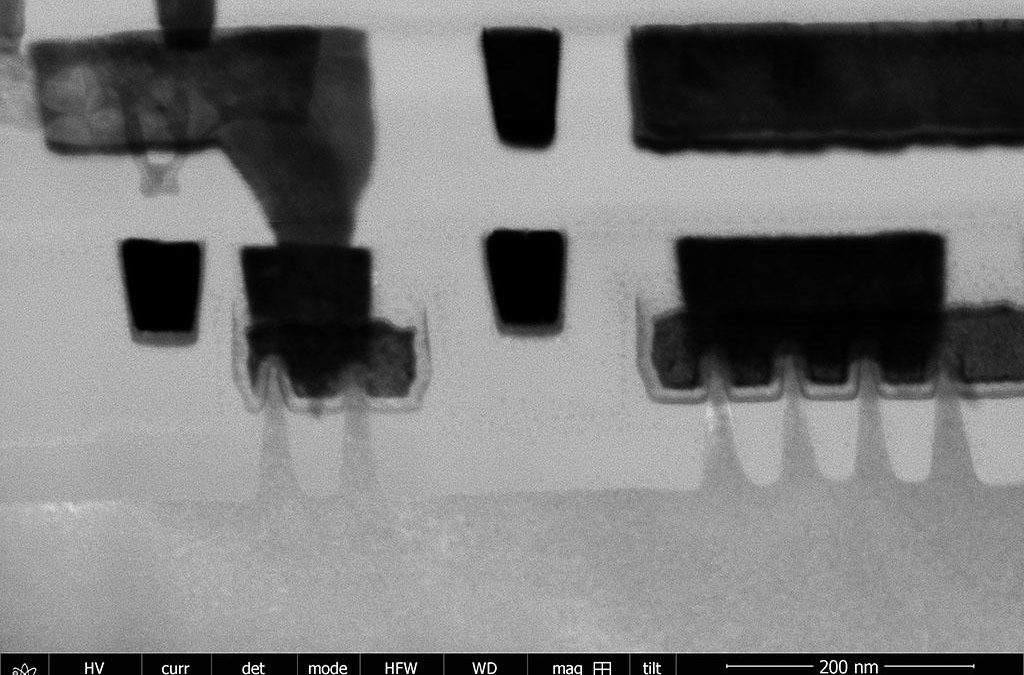

How does one prevent these package problems? First, process controls must be in place in the manufacturing line to ensure the process produces the same high-quality product each time. To check whether the present product is free of these unacceptable flaws, Scanning Acoustic Microscopy (SAM) is utilized. While X-ray inspections image differences in the densities of the materials in a package, SAM uses sound waves to image the inside of the package. This is much like the use of ultrasound in a hospital to image soft tissue (such as babies in the womb or hearts, which X-rays pass through without a clear image). As such, SAM is an excellent complement to X-rays and is considered non-destructive.

As part of an IC’s qualification, the package should be inspected for voids and delaminations. SAM is normally performed on the first product produced, providing a baseline for the device. After this initial testing, the samples are normally subjected to temperature cycles and then SAM tested again to see if the baseline data changed. This cycle of SAM inspections and temperature cycling is often repeated until the integrity of the package is demonstrated (or, conversely, is shown to have a problem).
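The baseline-versus-retest comparison can be pictured as a simple bookkeeping exercise. The sketch below assumes each inspection has already been reduced to a grid of true/false values marking delaminated pixels; the function names and the 1% growth limit are hypothetical choices for illustration, not an industry criterion.

```python
# Compare a baseline inspection with a post-temperature-cycling inspection.
# Each inspection is assumed to be a 2-D grid where True marks a pixel
# flagged as delaminated. The growth limit is a made-up example value.

def delaminated_fraction(mask):
    """Fraction of pixels flagged as delaminated."""
    total = sum(len(row) for row in mask)
    flagged = sum(1 for row in mask for pixel in row if pixel)
    return flagged / total if total else 0.0


def delamination_grew(baseline, after_cycling, max_growth=0.01):
    """True if the flagged area grew by more than the allowed fraction."""
    growth = delaminated_fraction(after_cycling) - delaminated_fraction(baseline)
    return growth > max_growth


if __name__ == "__main__":
    baseline = [[False] * 4 for _ in range(4)]
    baseline[0][0] = True                 # one small flaw at time zero

    after = [row[:] for row in baseline]
    after[0][1] = True                    # the flaw has spread after cycling
    after[1][0] = True

    print("baseline fraction:", delaminated_fraction(baseline))
    print("after cycling:    ", delaminated_fraction(after))
    print("grew beyond limit:", delamination_grew(baseline, after))
```

In practice the pass/fail criteria come from the applicable qualification plan; the point here is only that the second scan is judged against the first, not in isolation.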

Since SAM systems rely on sound waves to collect data about the inside of a package, the frequency of the sound wave plays an important role. High-frequency waves provide the most resolution but cannot penetrate deep into the sample. Conversely, low frequencies can penetrate all the way through the sample (through-transmission) but do not afford the resolution of the high-frequency transducers. As such, the selection of the appropriate transducer will have a strong effect on the acoustic image and the information it provides.
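One way to see why frequency matters so much is to look at the wavelength, which is simply the speed of sound divided by the frequency. The sketch below assumes a sound speed of roughly 1480 m/s in water, and the three transducer frequencies are arbitrary examples rather than recommendations. Wavelength only sets the scale of what can be resolved; the real figure also depends on the lens, the focal length, and the materials involved.

```python
# Wavelength of sound in water at a few example transducer frequencies.
# The sound speed is an approximate room-temperature value (assumption).
SOUND_SPEED_WATER_M_S = 1480.0


def wavelength_um(freq_mhz, speed_m_s=SOUND_SPEED_WATER_M_S):
    """Wavelength in microns: (m/s) / MHz works out to microns directly."""
    return speed_m_s / freq_mhz


if __name__ == "__main__":
    for freq in (15.0, 50.0, 230.0):   # example frequencies in MHz
        print(f"{freq:6.0f} MHz -> {wavelength_um(freq):6.1f} um")
```

The shorter the wavelength, the finer the detail that can be separated, which is why thin, small packages favor high-frequency transducers while thick parts need lower frequencies to get any signal back at all.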

There are different SAM manufacturers and models available and they all have their good points. Your choice depends on your needs. To continue the car analogy, one type doesn’t suit all—you don’t need a pickup truck to deliver jewelry, and you can’t use a Tesla to take five kids to soccer practice.

The Many Modes of SAM

If only naval sonar were as accurate as SAM! The different scanning methods provide insights that would otherwise be impossible to obtain. Of course, SAM is not the exclusive domain of the microelectronics industry.

The medical field has found uses for it to study biological cells and tissues for structure and motility. They have even used it to determine the efficacy of needle-less injections demonstrated by “Dr. Leonard McCoy” from the first Star Trek series way back in the 1960s. We actually have working models of that device now. Fascinating…

For our purposes, however, there are several very prevalent SAM modes and they reveal different aspects of problems. Typical modes that all the equipment vendors offer are:

- A-scan: The echo amplitude received at a single point, plotted against a horizontal axis of time. Because arrival time corresponds to depth, this mode is very helpful in identifying the location of the void or delamination in a package.

- B-scan: This is a cross-sectional view of the sample in a single plane.

- C-scan: A 2-dimensional image created from the returned waveforms (pulse-echo mode) of the transducer.
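A common way to think about these modes is as different slices of the same recorded data. The sketch below assumes the scanner has stored one echo waveform per (x, y) position in a nested list; that layout, the function names, and the tiny synthetic data set are all hypothetical and are not taken from any vendor's software.

```python
# Three views of one data set: volume[y][x] is a list of echo samples
# over time at that scan position. Layout and names are assumptions.

def a_scan(volume, x, y):
    """Waveform (amplitude vs. time) at a single scan position."""
    return volume[y][x]


def b_scan(volume, y):
    """Cross-section along one scan line: rows are time, columns are x."""
    line = volume[y]
    n_samples = len(line[0])
    return [[line[x][t] for x in range(len(line))] for t in range(n_samples)]


def c_scan(volume, gate_start, gate_end):
    """Plan-view image: peak |amplitude| inside a time gate at each (x, y)."""
    return [[max(abs(s) for s in wave[gate_start:gate_end]) for wave in row]
            for row in volume]


def make_demo_volume():
    """3 x 3 scan positions, 6 time samples each, one strong echo."""
    volume = [[[0.0] * 6 for _ in range(3)] for _ in range(3)]
    volume[1][1][3] = -0.9   # synthetic echo at x=1, y=1, time sample 3
    return volume


if __name__ == "__main__":
    vol = make_demo_volume()
    print("A-scan at (1, 1):", a_scan(vol, 1, 1))
    print("C-scan, gate 2..5:")
    for row in c_scan(vol, 2, 5):
        print("  ", row)
```

The time gate in the C-scan is what selects the depth of interest: move the gate and the same scan shows a different interface.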

Using an acoustic transducer, a wave front passes into the object of interest, which is typically in a water tank; the water couples the sound energy from the transducer to the sample. Variances in the sound conduction ability of the internal materials reflect the energy back to the transducer. Any “air gaps” in the package will reflect the entire sound wave, highlighting a potential problem. Anomalies are quickly identified and can be used for further studies and feedback to production.
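The reason an air gap stands out so strongly can be sketched with the standard normal-incidence reflection formula, R = (Z2 - Z1) / (Z2 + Z1), where Z1 and Z2 are the acoustic impedances on either side of an interface. The impedance values below are rounded, order-of-magnitude figures assumed for illustration (the molding compound in particular varies from product to product), so treat the output as indicative only.

```python
# Pressure reflection coefficient at a flat interface, normal incidence.
# Impedances are in MRayl and are approximate, assumed values.
IMPEDANCE_MRAYL = {
    "water": 1.5,
    "mold_compound": 6.5,   # assumed; depends on filler content
    "silicon": 20.0,
    "air": 0.0004,
}


def reflection_coefficient(z_from, z_to):
    """Fraction of the incident pressure amplitude that is reflected."""
    return (z_to - z_from) / (z_to + z_from)


if __name__ == "__main__":
    z = IMPEDANCE_MRAYL
    bonded = reflection_coefficient(z["mold_compound"], z["silicon"])
    gap = reflection_coefficient(z["mold_compound"], z["air"])
    print(f"mold compound -> silicon: {bonded:+.3f}")
    print(f"mold compound -> air gap: {gap:+.5f}")
```

With these assumed values, a well-bonded interface returns only part of the wave, while the air gap returns essentially all of it with the sign flipped. That polarity inversion is one of the cues used to tell a delamination from a good bond.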

For example, delaminations between the packaging and the chip are a must-know item, but knowing where they occur is equally important. Does the delamination occur at the die/adhesive level (die attach) or the adhesive/leadframe level? A B-scan (a cross-section) reveals diagnostic detail not clearly shown by other methods. In the included acoustic image, the C-scan is shown on the top for reference, which shows an area of delamination. The white line marks where the cross-section was taken; the B-scan is in the bottom half of the photograph. Clearly, there is delamination at the die/adhesive level, but it also occurs at the adhesive/leadframe level. You must solve both problems or you solve neither!

Sonoscan C-SAM®

Consider the FACTS²™ DF2400™ C-SAM® (by SONOSCAN) unit which can image with a precision of ± 0.5 microns using multiple scan heads. It can improve in-production throughput between 2 and 7 times over earlier units. It can handle Auer Boat carriers, JEDEC trays or even IGBT modules.

If you’re using very small parts, an optional vacuum hold-down assures high-quality images. The waterfall/plume transducers eliminate immersion to prevent water ingress (if that is a concern), and they also eliminate “floating” for additional image quality.

Sonix SAM

This company has been busy innovating since 1986, and pioneering breakthroughs for accurate imaging; they’ve often led the industry by finding solutions that eluded others.

As a further example of an in-line, fully automated scanner, the Sonix system offers similar capabilities to the Sonoscan C-SAM, but Sonix is particularly proud of its over-sampling, anti-aliasing capabilities. Aliasing occurs when the readings are spaced too far apart to overlap, so that images begin to look blocky. Fine detail can be lost, especially as components continue to diminish in size.
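The underlying idea comes from general sampling theory: a repeating feature needs more than two readings per period, or it shows up in the image at a false, coarser spacing. The sketch below is a generic illustration of that rule and does not describe how Sonix implements its over-sampling; the function names and the example dimensions are hypothetical.

```python
# Generic sampling-theory illustration of aliasing in a scanned image.
# Not a description of any vendor's algorithm.

def is_adequately_sampled(feature_period_um, step_um):
    """Nyquist rule of thumb: more than two readings per feature period."""
    return step_um < feature_period_um / 2.0


def apparent_period_um(feature_period_um, step_um):
    """Period at which a repeating feature appears after sampling."""
    f = 1.0 / feature_period_um          # true spatial frequency
    fs = 1.0 / step_um                   # sampling frequency
    f_alias = abs(f - fs * round(f / fs))
    return float("inf") if f_alias == 0 else 1.0 / f_alias


if __name__ == "__main__":
    period = 20.0                        # microns between repeating features
    for step in (5.0, 15.0):             # scan step sizes in microns
        ok = is_adequately_sampled(period, step)
        seen = apparent_period_um(period, step)
        print(f"step {step:4.1f} um: adequate={ok}, appears as {seen:.1f} um")
```

With the fine step the 20-micron pattern is reproduced faithfully; with the coarse step it masquerades as a much larger pattern, which is exactly the kind of false detail that over-sampling is meant to avoid.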

You may have experienced this with textures in video games, for example. This screen-grab from a video game shows the result with anti-aliasing technology off (left half) and on (right half). The smoothing isn’t an artifact; it is an actual increase in detail, and that helps us spot problems.

With MUF (Molded Under-Fill) for flip-chips, you decrease manufacturing costs; however, the epoxy is not nearly as transparent as silicon and can contain particles that produce false positives in SAM images. Sonix’s MFCI™ software compensates for spatial and edge resolution, as well as enhancing contrast. If you were thinking that MUF was a false economy, it’s not, and Sonix may have your solution.

OKOS SAM

This company is comfortable dealing with NDT (nondestructive testing) on just about all of the semiconductor packages you can imagine, even wafers, motherboards, LED/LCD panels, flip-chips, or plastic packages. They too have exclusive software (called WinSam®) that works in combination with their special transducers and custom digitizers. They can handle just about every type of scan from “A” to “3D”.

They offer different models of SAMs such as the 250-P, whose smaller size is perfectly suited to laboratory work; the 400-P in-line model that will suit a production facility; or the granddaddy of them all, the Macrovue 1000. Complemented by their less bulky transducer/receivers, they significantly increase the scanning resolution capability for hybrid and other emerging technologies.

They even offer training refreshers for scanning techniques, starter classes for your team, or advanced training courses. You can contact them for training subjects offered, and pricing.

The Takeaway

There is no definitive winner among these, or any of the other models available nowadays. The right choice depends heavily on your own needs, and every unit has its own niche. Your best option is always to investigate with the manufacturers themselves.

Send them samples to be tested (usually for free!) to see what kind of results they can achieve for you. Let their skilled people put their own machines through their paces so you can see what the maximum capacity is. With appropriate training, whatever they can do, you can accomplish, too.

Or if you just have a now-and-then need, contact Spirit Electronics and talk to their experts about acoustic microscopy. They can run your samples and help interpret the data while providing quick turn times.
